Content index

Your app's content index is used by the OpenAI question component to provide context data specific to a user's question or statement. This context, along with the prompt template, allows the OpenAI models to generate comprehensive responses based on knowledge derived from your own content.
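To illustrate how retrieved content and a prompt template combine, here is a minimal Python sketch. It is not Meya's implementation: the template text, the keyword-overlap ranking, and the names `PROMPT_TEMPLATE`, `retrieve`, and `build_prompt` are all hypothetical, chosen only to keep the example self-contained.

```python
import re

# Hypothetical prompt template; not Meya's actual default template.
PROMPT_TEMPLATE = (
    "Answer the question using only the context below.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\nAnswer:"
)


def words(text: str) -> set[str]:
    """Lower-cased set of words, used for a naive keyword-overlap score."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, chunks: list[str], k: int) -> list[str]:
    """Return the k chunks that share the most words with the question.

    Assumption: keyword overlap stands in for whatever relevance ranking
    the real index uses.
    """
    q = words(question)
    # sorted() is stable, so ties keep their original order.
    ranked = sorted(chunks, key=lambda c: len(q & words(c)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, chunks: list[str], k: int = 2) -> str:
    """Fill the template with the k most relevant chunks and the question."""
    context = "\n---\n".join(retrieve(question, chunks, k))
    return PROMPT_TEMPLATE.format(context=context, question=question)


index = [
    "Refunds are issued within 5 business days.",
    "Our office is open Monday to Friday.",
    "To request a refund, contact support with your order number.",
]
print(build_prompt("How do I get a refund?", index))
```

The overall flow is the point here: find the chunks most related to the question, then place them in the template alongside the question before sending it to the model.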

The Meya OpenAI integration gives you a lot of control over how content items are sourced or imported, how they are indexed, and how they are added to your prompt. The default settings have been calibrated to give good responses for most general Q&A interactions, but you might want to adjust the content index and prompt template to achieve a particular response tone, or to add more or less context.

How indexing works

The index is built from all the content items that you imported from your data sources or entered manually. When you index your content items, the indexer does the following:

- Batch process all your content items.

- Break each content item section into smaller chunks.

- Add these chunks to your app's index.
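The steps above can be sketched in Python as follows. This is a simplified illustration, not Meya's indexer: tokens are approximated with a regular expression rather than the GPT-2 tokenizer, batching is reduced to a single loop, chunks are split purely by token count, and `chunk_text`, `build_index`, and `Chunk` are hypothetical names.

```python
import re
from dataclasses import dataclass

# Approximate tokens: runs of word characters, newlines, or single symbols.
# Assumption: the real indexer counts GPT-2 tokens instead.
TOKEN_RE = re.compile(r"\w+|\n|[^\w\s]")


@dataclass
class Chunk:
    item_id: str
    text: str


def chunk_text(text: str, chunk_size: int) -> list[str]:
    """Split text into consecutive pieces of at most chunk_size tokens."""
    spans = [m.span() for m in TOKEN_RE.finditer(text)]
    pieces = []
    for start in range(0, len(spans), chunk_size):
        group = spans[start:start + chunk_size]
        # Slice the original text so spacing inside a chunk is preserved.
        pieces.append(text[group[0][0]:group[-1][1]])
    return pieces


def build_index(items: dict[str, list[str]], chunk_size: int = 600) -> list[Chunk]:
    """Process every content item, chunk each section, and collect the chunks."""
    index: list[Chunk] = []
    for item_id, sections in items.items():
        for section in sections:
            for piece in chunk_text(section, chunk_size):
                index.append(Chunk(item_id, piece))
    return index


items = {"faq-refunds": ["Refunds take 5 days. Contact support to start one."]}
for chunk in build_index(items, chunk_size=5):
    print(chunk)
```

With a tiny chunk size of 5, the single section above becomes three chunks; at the default of 600 it would stay in one piece.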

Setting the chunk size

The primary control parameter for the content index is the chunk size. The chunk size is measured in tokens (the standard OpenAI GPT-2 based tokens). As a rough guide, a token can be seen as either a word or a single symbol; for example, the sentence "The girl skipped ahead" counts as 4 tokens. Special characters such as punctuation and newlines are also counted as separate tokens, and longer or less common words may be split into more than one token.
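The following sketch approximates the token-counting rule described above. It is a stand-in under stated assumptions: the regular expression counts each word, punctuation mark, and newline as one token, whereas the real GPT-2 encoding (available, for example, through OpenAI's `tiktoken` library) uses byte-pair encoding, so exact counts can differ. `approx_token_count` is a hypothetical helper.

```python
import re

# Approximation only: each run of word characters, each newline, and each
# other non-space symbol counts as one token. GPT-2's byte-pair encoding can
# split long or rare words into several tokens, so real counts may differ.
TOKEN_RE = re.compile(r"\w+|\n|[^\w\s]")


def approx_token_count(text: str) -> int:
    """Return a rough token count for text."""
    return len(TOKEN_RE.findall(text))


print(approx_token_count("The girl skipped ahead"))     # 4
print(approx_token_count("The girl skipped ahead.\n"))  # 6
```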

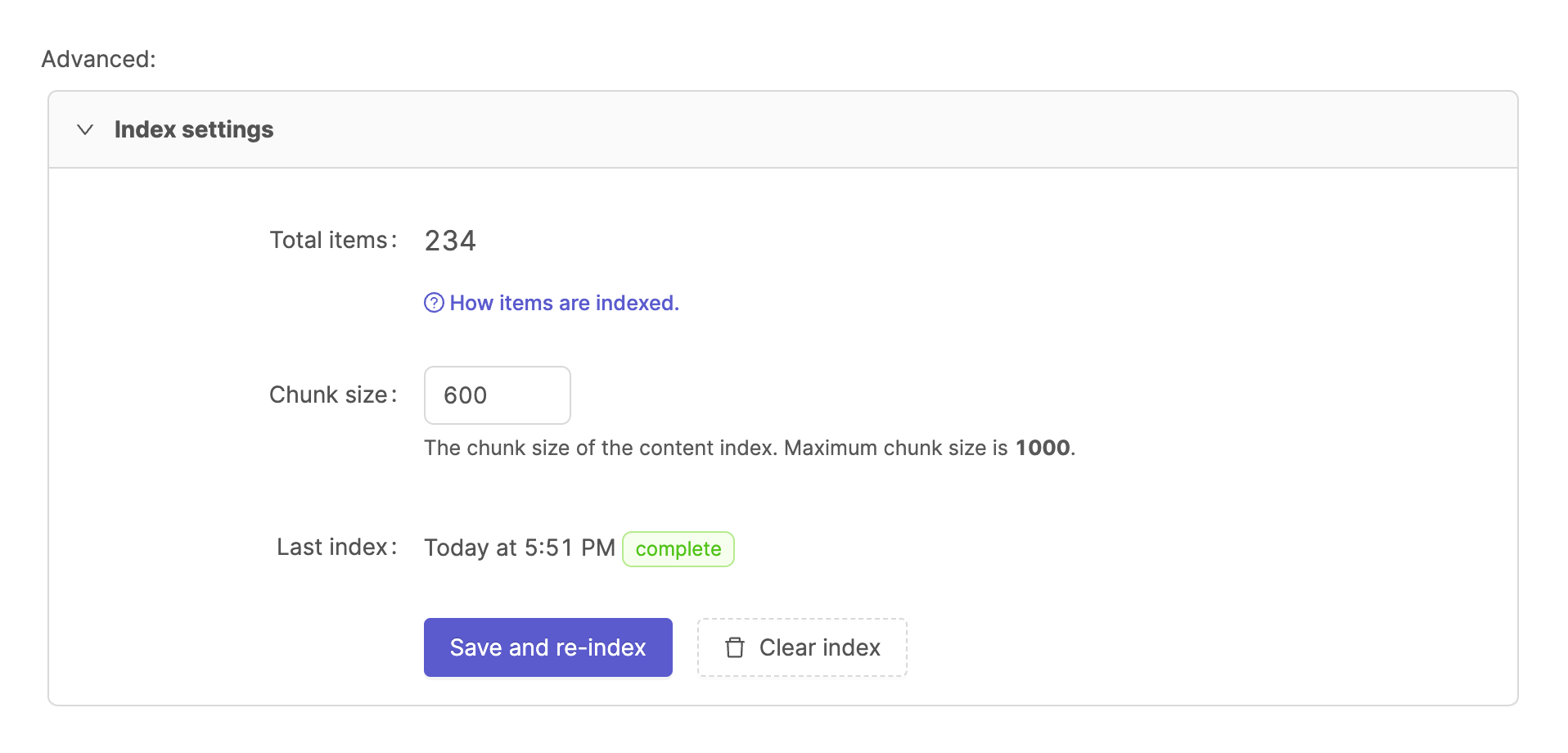

You can set your app's index chunk size in the OpenAI integration page.

- Open the Meya Console.

- Select your app from the app list.

- Go to the Integrations page and select the OpenAI integration.

- In the Settings tab, scroll down to the Advanced section.

- In the Index settings panel, find the Chunk size field.

The default chunk size is 600 tokens, which works well for most use cases. However, if you have a very large number of content items, you might want to reduce the chunk size and increase the question component's max_content_chunks field so that more chunks are added to your prompt. This lets the bot draw on more granular context from more sources, which could produce better responses.
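The trade-off between chunk size and max_content_chunks is simple arithmetic: the context added to the prompt is at most chunk_size × max_content_chunks tokens. The sketch below assumes a hypothetical budget of 1,800 context tokens; the budget, the helper names, and the resulting chunk counts are illustrative, not Meya defaults.

```python
def context_tokens(chunk_size: int, max_content_chunks: int) -> int:
    """Upper bound on the context tokens the chunks add to the prompt.

    It is an upper bound because a content item's last chunk is often
    shorter than chunk_size.
    """
    return chunk_size * max_content_chunks


def chunks_for_budget(context_budget: int, chunk_size: int) -> int:
    """How many full-size chunks fit within a context token budget."""
    return context_budget // chunk_size


budget = 1800  # hypothetical number of prompt tokens reserved for context
for size in (600, 300, 200):
    n = chunks_for_budget(budget, size)
    print(f"chunk_size={size}: up to {n} chunks, {context_tokens(size, n)} tokens")
```

Halving the chunk size doubles how many chunks fit in the same budget, so the prompt can draw on more distinct content items for roughly the same token cost.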

We encourage you to experiment with this setting as well as the temperature or topP values in your prompt's hyperparameters.
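For reference, the standard definitions of these two sampling parameters are sketched below: temperature divides the model's logits before the softmax, and top-p (nucleus) sampling keeps only the smallest set of most likely tokens whose combined probability reaches the threshold. This illustrates the general technique only; the logits are made up, the function names are hypothetical, `top_p` corresponds to the topP setting, and the sketch is not how OpenAI applies these parameters internally.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn logits into probabilities; temperature must be > 0 here.

    Lower temperatures sharpen the distribution, higher ones flatten it.
    """
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs: list[float], top_p: float) -> list[float]:
    """Keep the most likely tokens until their mass reaches top_p, then renormalize."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept: set[int] = set()
    cumulative = 0.0
    for i in order:
        kept.add(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return [probs[i] / mass if i in kept else 0.0 for i in range(len(probs))]


logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
for t in (0.5, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
print(top_p_filter(softmax_with_temperature(logits, 1.0), 0.8))
```

Lowering either value makes the output more focused and predictable; raising either makes responses more varied.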
