Setup

How to set up an OpenAI integration (beta)

This guide will walk you through the process of connecting your Meya app with OpenAI, importing and indexing your content, and setting up the question component to answer user questions based on your content.

Before you begin

You need an OpenAI API key to set up the integration. For Meya dev apps, you can use Meya's OpenAI API key, but for staging and production apps you must provide your own API key.

Getting your OpenAI API key

If you don't have an OpenAI account yet, you can sign up here: https://platform.openai.com/signup

- Once you're in your account, go to the API keys page.

- Click the + Create new secret key button.

- Copy and store the newly generated API key (don't lose it, because it's only displayed once 🙂).

Setting up the OpenAI integration on Meya

- Open the Meya Console.

- Select your app from the app list.

- (You can also create a new app using the OpenAI app template.)

- Go to the Integrations page.

- Find the OpenAI integration and click Add.

- (If you created your app using the OpenAI template, this integration is already added. In this case, click Edit.)

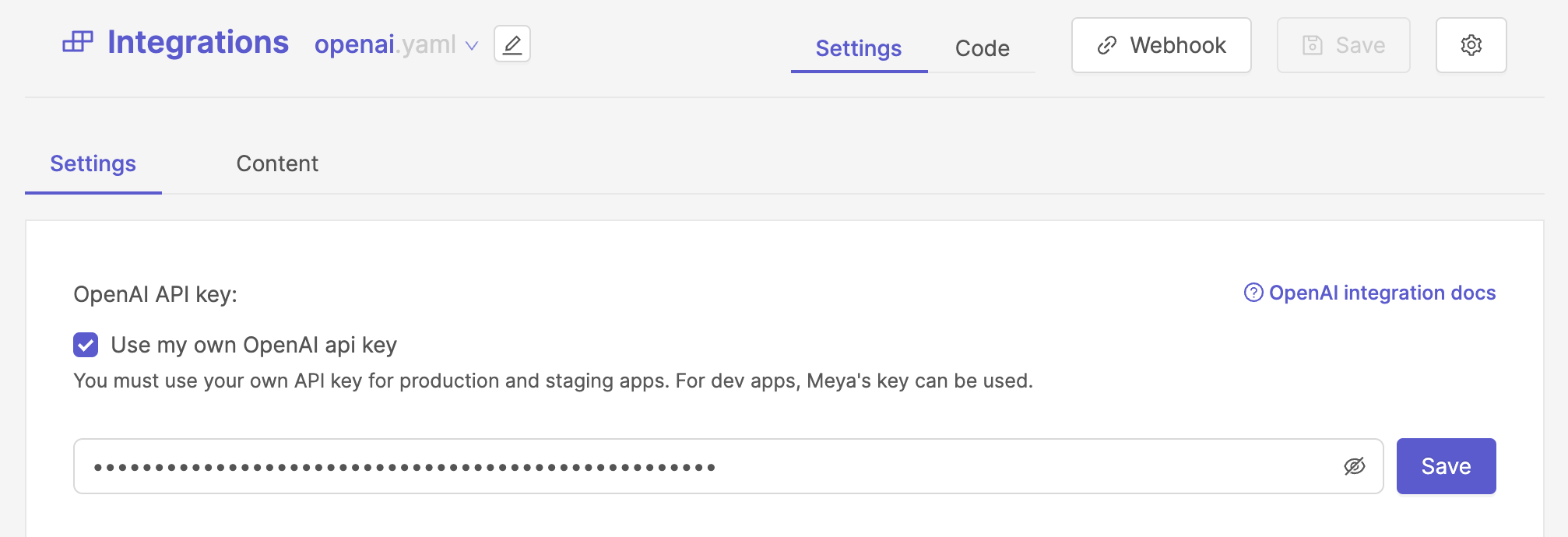

- If you're using your own API key, check the Use my own OpenAI api key checkbox.

- (This checkbox isn't shown for staging and production apps, which always require your own key.)

- Paste your OpenAI API key into the API key field and click the Save button.

- Saving does a few things:

- It validates your OpenAI API key by making a test API call to OpenAI.

- It stores your API key in your app's vault under the openai.api_key vault key.

- It creates the openai.yaml BFML file, whose api_key field references the openai.api_key vault key. You can check the BFML code by clicking the Code tab.

- If you're using Meya's API key, just click the Save button on the top right.
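The exact check Meya runs when you click Save isn't documented here. As a rough illustration only, the Python sketch below assumes that an authenticated GET to OpenAI's list-models endpoint (https://api.openai.com/v1/models) is a fair stand-in for that test call. The function names build_validation_request and key_is_valid are hypothetical.

```python
import urllib.error
import urllib.request

# Assumption: an authenticated GET to OpenAI's "list models" endpoint is a
# reasonable stand-in for the test call Meya makes; Meya's actual check may differ.
OPENAI_MODELS_URL = "https://api.openai.com/v1/models"


def build_validation_request(api_key: str) -> urllib.request.Request:
    """Build (but don't send) an authenticated request used to test a key."""
    return urllib.request.Request(
        OPENAI_MODELS_URL,
        headers={"Authorization": f"Bearer {api_key}"},
        method="GET",
    )


def key_is_valid(api_key: str, timeout: float = 10.0) -> bool:
    """Send the test request; True on HTTP 200, False if OpenAI rejects it.

    Network failures (DNS errors, refused connections, timeouts) are not
    caught and propagate to the caller, so they aren't mistaken for a bad key.
    """
    request = build_validation_request(api_key)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status == 200
    except urllib.error.HTTPError:
        # Any HTTP error status (e.g. 401 for an invalid or revoked key)
        # is treated as a failed check in this sketch.
        return False
```

Pass the key from wherever you keep it (an environment variable, for example) rather than hard-coding it in source.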

Import some data

Next, import some content that the OpenAI model can use to answer questions. Several data sources are available, but this guide uses the Sitemap data source.

Let's create a new Sitemap data source:

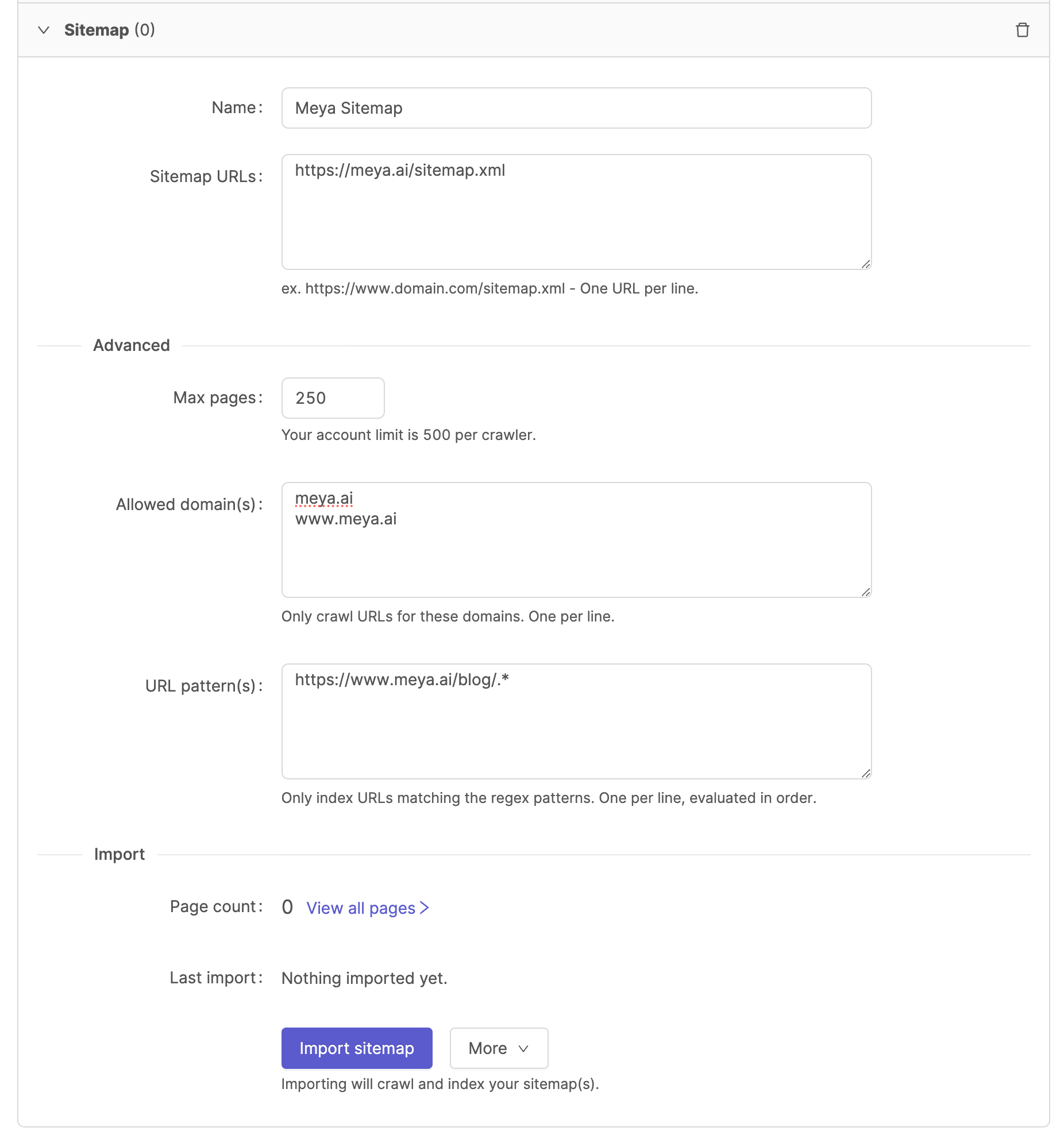

- On the OpenAI integration page, hover over the + Add data source button and select the Sitemap option.

- Give your Sitemap data source a descriptive name. In this guide we use Meya Sitemap.

- Add your sitemap URLs in the Sitemap URLs text area.

- Set a page limit; a limit of 250 pages generally produces good results.

- Optional settings:

- Allowed domains: some sitemaps refer to other sitemaps or to pages on other domains or sub-domains. We usually recommend restricting the data source to your main domain.

- URL patterns: optionally add URL regex patterns to limit the imported pages, for example to import only your blog articles.

- Click Import sitemap.
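To make the Allowed domains, URL patterns, and page limit settings more concrete, here is a minimal Python sketch of how such filters could be applied to a standard sitemaps.org urlset file. It is not Meya's importer. The helper name select_pages, the exact-hostname domain match, and the use of Python's re.search for patterns are all assumptions.

```python
import re
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Standard namespace used by <urlset> sitemaps (sitemaps.org protocol).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def select_pages(sitemap_xml, allowed_domains, url_patterns, page_limit=250):
    """Return up to page_limit page URLs that pass the domain and regex filters.

    Hypothetical helper: an empty allowed_domains or url_patterns disables
    that filter. Domains are compared as exact hostnames (an assumption).
    Nested <sitemapindex> files are not followed in this sketch.
    """
    root = ET.fromstring(sitemap_xml)
    patterns = [re.compile(p) for p in url_patterns]
    selected = []
    for loc in root.findall("sm:url/sm:loc", SITEMAP_NS):
        url = (loc.text or "").strip()
        host = urlparse(url).hostname or ""
        if allowed_domains and host not in allowed_domains:
            continue  # page lives on a domain outside the allow list
        if patterns and not any(p.search(url) for p in patterns):
            continue  # page doesn't match any URL pattern
        selected.append(url)
        if len(selected) >= page_limit:
            break  # stop once the page limit is reached
    return selected
```

A real importer would also need to fetch the sitemap over HTTP and follow nested sitemap index files; both are left out to keep the sketch short.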

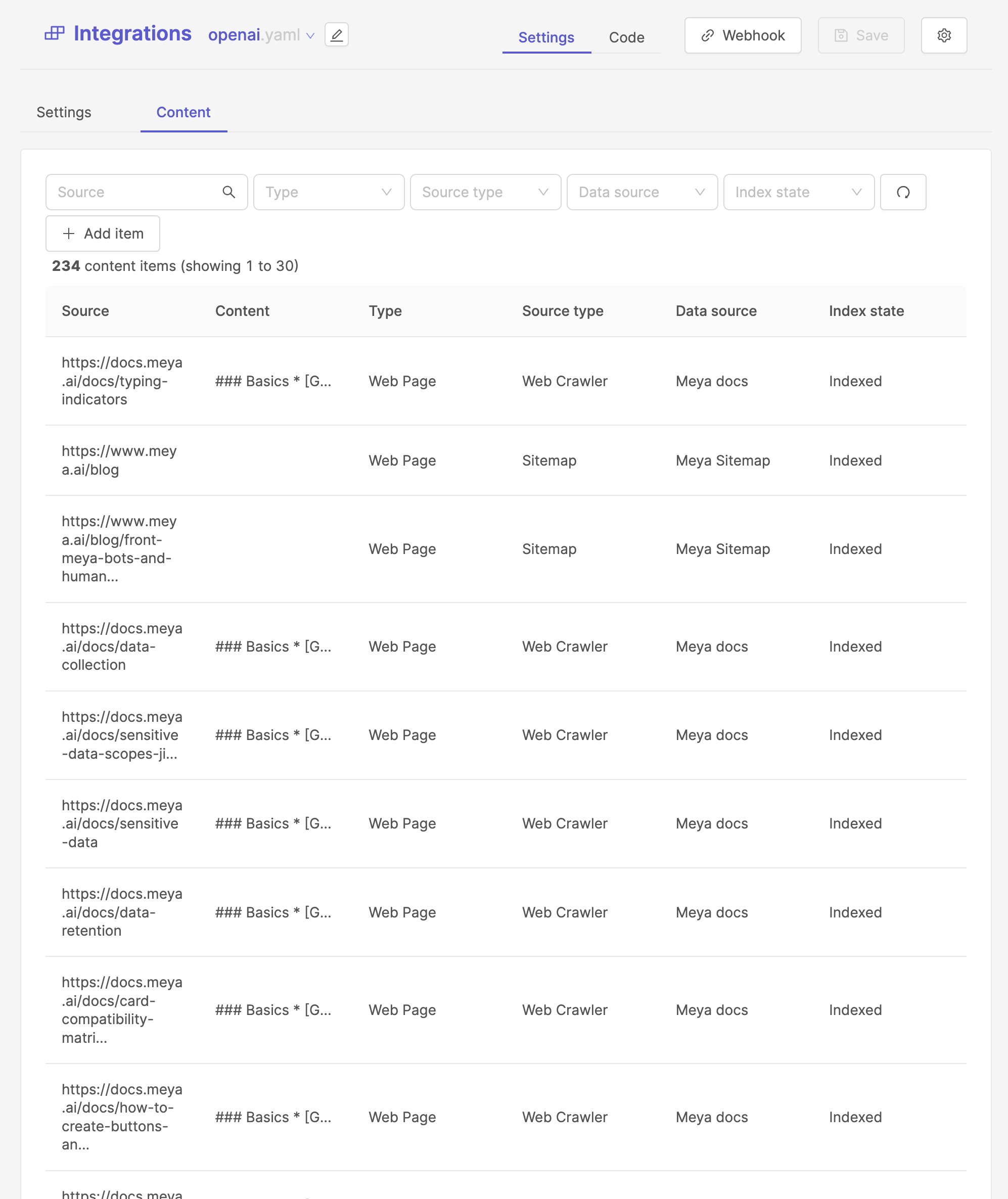

View your content items

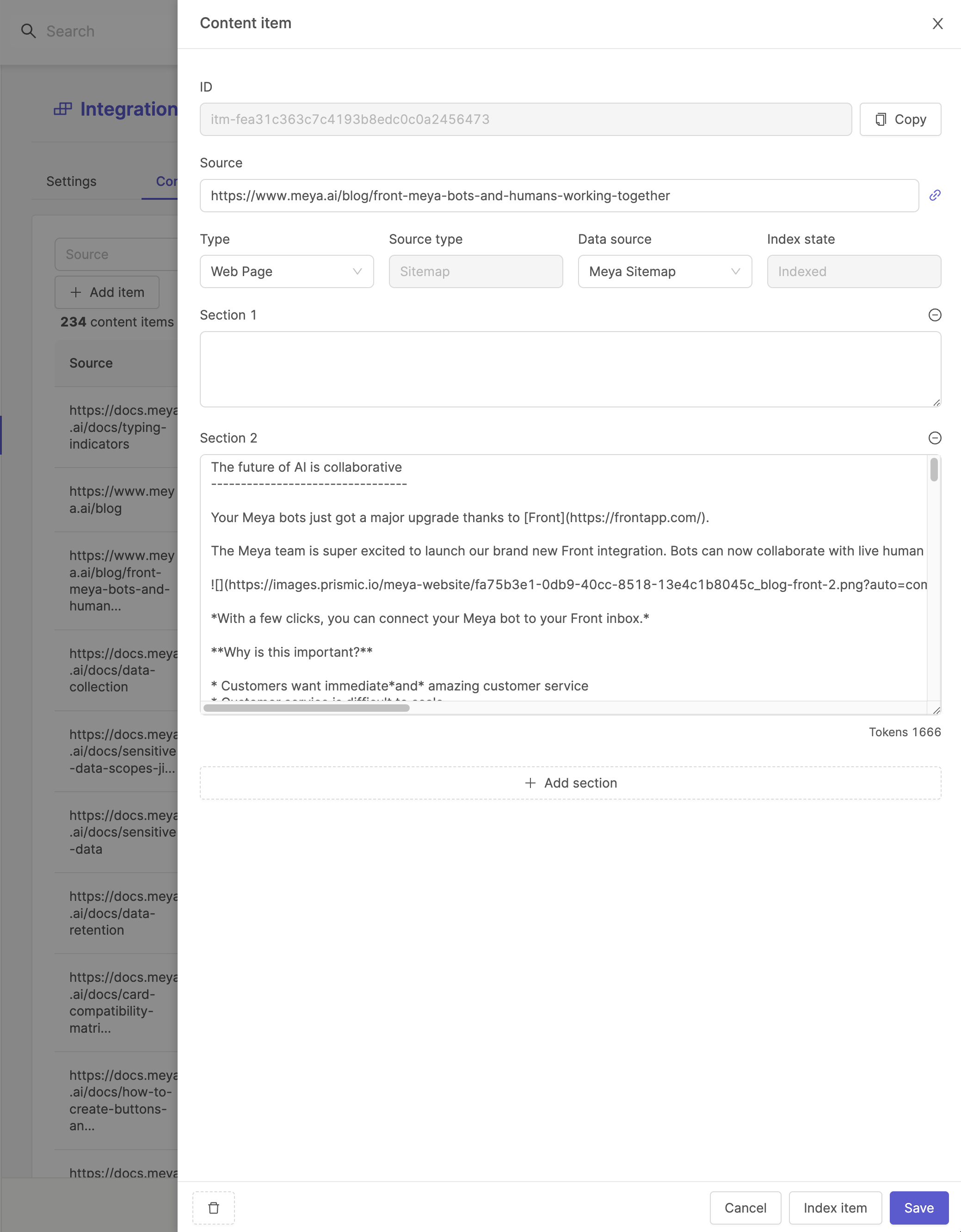

While the web crawler downloads and imports your web pages, you can switch to the Content tab to monitor progress and view the imported data.

Every imported content item is fully editable. Click a row in the table to view the content details; this opens a drawer with all the sections the web crawler successfully parsed out.

The content is automatically converted to Markdown. This is useful because OpenAI models work well with Markdown, and most messaging channels support it.

Add the question component to your BFML

If you created your app from the OpenAI template app, this component is already configured for you in the flow/catchall.yaml flow file.

- Open the Meya Console.

- Select your app from the app list.

- Go to the Flows page.

- Click the + Create flow button and name the flow catchall.yaml.

- (If you already have a catchall flow, you can add the component there instead.)

- Paste the following flow code into your flow file and click Save:

```yaml
triggers:
  - catchall

steps:
  - flow_set:
      question_entry_id: (@ flow.event.id )
      question: (@ flow.event.data.text )
  - typing: on
  - question: (@ flow.question )
    integration: integration.openai
    max_tokens: 300
    max_content_chunks: 8
  - value: (@ flow.error )
    case:
      no_content:
        jump: no_content
      timeout:
        jump: timeout
      internal:
        jump: error
    default: next
  - (answer)
  - say: (@ flow.answer )
    markdown: true
  - end
  - (no_content)
  - say: Sorry, I don't know how to answer that. Can you rephrase it?
  - end
  - (timeout)
  - say: Sorry, I'm currently under heavy load, could you please try again later?
  - end
  - (error)
  - say: There was an error, our engineers are looking into it. Please try again later.
  - end
```

This flow does a few things:

- It configures a text.catchall trigger that fires whenever no other BFML flow handles the user input.

- It stores the user's question in flow scope.

- It turns on the typing indicator to signal to the user that the bot is working on a response.

- It runs the question component with these settings:

- max_tokens: the maximum number of tokens the OpenAI model generates in its response.

- max_content_chunks: the maximum number of content chunks, taken from your indexed content, that are most relevant to the user's question.

- Depending on the model used in your prompt template, the question component automatically includes chat history along with the content for context, and trims the prompt to fit within the model's token limit.

- It evaluates the question component's result: if flow.error is no_content, timeout, or internal, the flow jumps to the matching step; otherwise it continues to the answer step and says the answer with Markdown enabled.
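Meya doesn't spell out how the question component trims the prompt. The Python sketch below illustrates one plausible greedy strategy under stated assumptions: tokens are approximated by whitespace-separated words (a real implementation would use the model's tokenizer), the most relevant content chunks are packed first, and any remaining budget goes to the most recent chat turns. build_context and count_tokens are hypothetical names.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def build_context(question, chunks, history, model_limit, max_tokens, max_content_chunks):
    """Greedily pack content chunks, then recent chat turns, into the prompt budget.

    chunks: content chunks, most relevant first.
    history: chat turns, oldest first.
    Returns (kept_chunks, kept_history), with history still oldest first.
    """
    # Reserve room for the model's answer and for the question itself.
    budget = model_limit - max_tokens - count_tokens(question)

    kept_chunks = []
    for chunk in chunks[:max_content_chunks]:
        cost = count_tokens(chunk)
        if cost > budget:
            break  # stop at the first chunk that no longer fits
        kept_chunks.append(chunk)
        budget -= cost

    kept_history = []
    for turn in reversed(history):  # newest turns are kept first
        cost = count_tokens(turn)
        if cost > budget:
            break
        kept_history.append(turn)
        budget -= cost
    kept_history.reverse()  # restore chronological order

    return kept_chunks, kept_history
```

In this sketch, reserving max_tokens up front keeps room for the model's answer, so lowering max_tokens or max_content_chunks leaves more space for chat history.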

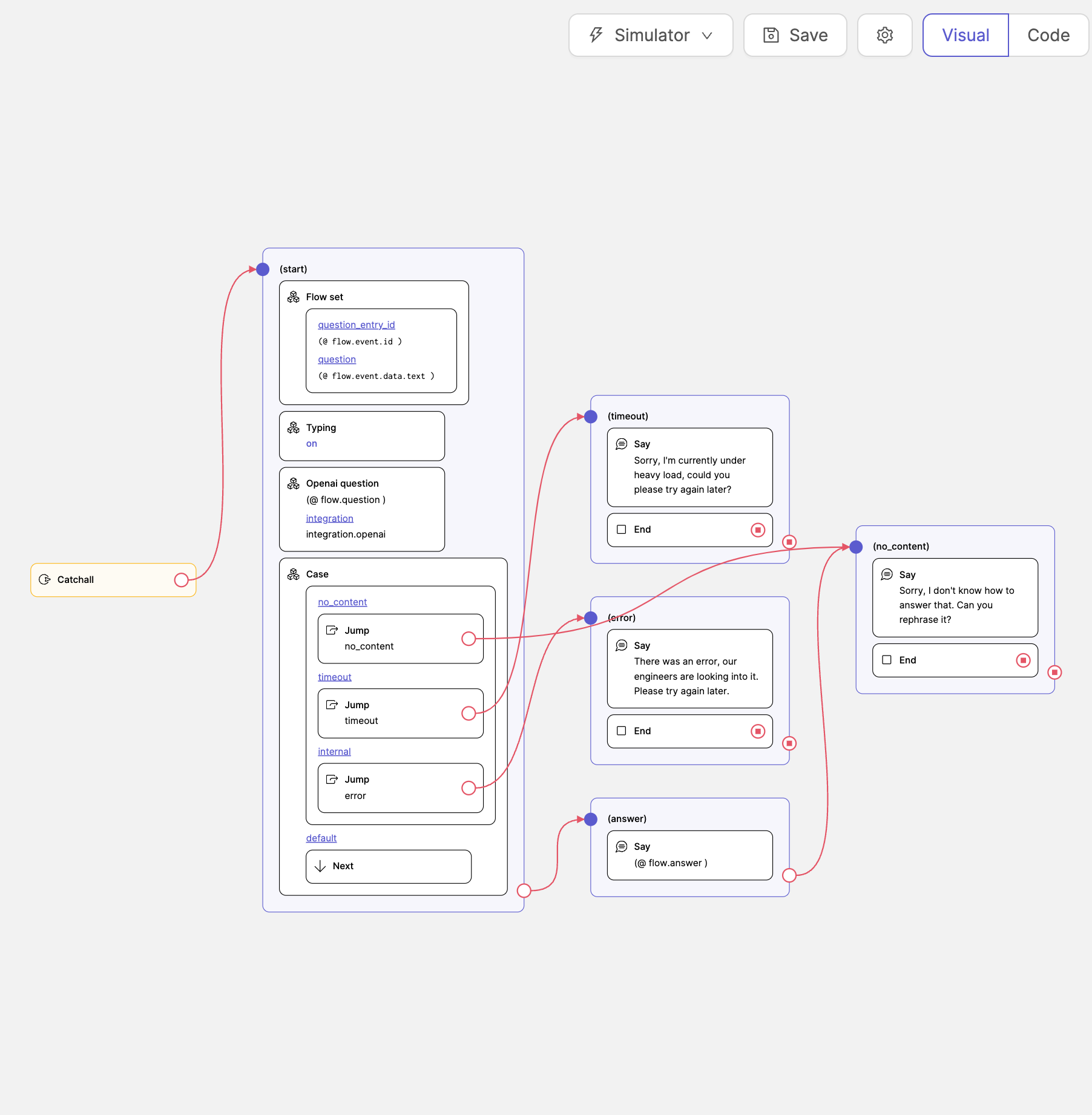

Here is a visual representation of the BFML flow:

Now open the Simulator page and ask your bot some questions. If you're still in the BFML editor, you can click the Simulator button at the top of the page instead.

Troubleshooting

Indexing is taking very long

Indexing a few hundred pages generally takes only a couple of minutes. However, if you're using Meya's OpenAI API key, indexing can take longer due to high API usage.

Meya retries requests when OpenAI rate-limits the indexer, which slows indexing down. If this happens, we recommend cancelling the indexing job and using your own OpenAI API key.
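To see why a busy shared key slows indexing, consider a typical retry policy. The following Python sketch retries a call with exponential backoff whenever it is rate limited. Meya's actual retry policy isn't documented here; RateLimitError and call_with_retries are hypothetical names standing in for an HTTP 429 response and the indexer's request loop.

```python
import time


class RateLimitError(Exception):
    """Hypothetical exception standing in for an HTTP 429 response from OpenAI."""


def call_with_retries(fn, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call fn(), retrying with exponential backoff while it is rate limited.

    Waits base_delay, 2 * base_delay, 4 * base_delay, ... between attempts and
    re-raises RateLimitError once max_attempts calls have all failed.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the rate-limit error
            sleep(base_delay * 2 ** (attempt - 1))
```

With the defaults, a job that keeps hitting the limit waits 1, 2, 4, and 8 seconds across five attempts, so frequent rate limiting adds up quickly over hundreds of pages.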

Importing web data is taking forever

It's easy to run a very broad crawl with the Web crawler data source. If you don't constrain the crawler appropriately, it can easily follow links to other websites that aren't relevant to your content. To keep the crawler from going down the wrong "rabbit holes", we recommend the following:

- Set the Allowed domains to only include the domains and sub-domains that are relevant to your content.

- Add Exclusion patterns to prevent the web crawler from following certain links.

- Add URL patterns to import and index matching pages.

The combination of Exclusion patterns and URL patterns can be very powerful, especially when you want the crawler to follow links more broadly (i.e. to "discover" more pages) but you're only interested in parsing certain pages.
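The split between following a link and indexing it can be sketched as a small Python function. This is an illustrative model, not Meya's crawler. It assumes that exclusion patterns block a link entirely, that URL patterns only decide whether a followed page is indexed, and that domains are matched as exact hostnames. classify_link is a hypothetical name.

```python
import re
from urllib.parse import urlparse


def classify_link(url, allowed_domains, exclusion_patterns, url_patterns):
    """Return (follow, index) for a link the crawler has discovered.

    Hypothetical helper. An empty url_patterns list means every followed
    page is indexed.
    """
    host = urlparse(url).hostname or ""
    if host not in allowed_domains:
        return False, False  # off-domain: don't follow, don't index
    if any(re.search(p, url) for p in exclusion_patterns):
        return False, False  # excluded: the crawler never visits it
    # Followed pages are only parsed and indexed if they match a URL pattern.
    index = not url_patterns or any(re.search(p, url) for p in url_patterns)
    return True, index
```

In this model, a page such as /about is still visited so the crawler can discover links on it, but only pages matching a URL pattern like /docs/ end up in your content.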

The catchall flow doesn't run as expected

The question component can fail for several reasons, for example an OpenAI timeout, an index timeout, or no matching content. In these cases, check your app's logs to debug the flow's execution. Meya provides a Logs page where you can query your app's logs with Meya's GridQL query language.
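When reading the logs, it helps to know which branch of the catchall flow's case step ran. The Python function below is a hypothetical analogue of that branching, not BFML and not Meya's runtime. It maps the flow.error values used in the catchall flow (no_content, timeout, internal) to the same replies and logs a warning, while any other value falls through to the answer, matching default: next. ERROR_REPLIES and reply_for are illustrative names.

```python
import logging
from typing import Optional

logger = logging.getLogger("catchall")

# Replies copied from the catchall flow, keyed by the flow.error value
# that its case step checks.
ERROR_REPLIES = {
    "no_content": "Sorry, I don't know how to answer that. Can you rephrase it?",
    "timeout": "Sorry, I'm currently under heavy load, could you please try again later?",
    "internal": "There was an error, our engineers are looking into it. Please try again later.",
}


def reply_for(error: Optional[str], answer: Optional[str]) -> Optional[str]:
    """Mirror the flow's case step.

    Known errors map to a canned reply (and are logged); anything else,
    including no error at all, falls through to the answer (default: next).
    """
    if error in ERROR_REPLIES:
        logger.warning("question component failed: %s", error)
        return ERROR_REPLIES[error]
    return answer
```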
