Sitemap

Import content items from a sitemap

Most websites define a sitemap.xml file that lists all the pages a web crawler should crawl and index. Importing from the sitemap is usually the easiest and most reliable way to quickly import all of your website's content pages.

This data source lets you provide one or more sitemap URLs that the Meya web crawler will crawl and import. In addition to the sitemap URLs, the Meya web crawler will also try to download the robots.txt file for each unique domain found among the URLs parsed from your sitemaps.
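To make the robots.txt step concrete, here is a minimal Python sketch of how a crawler could read the page URLs out of a sitemap and derive one robots.txt URL per unique domain. It is an illustration under assumptions, not Meya's implementation: the names `parse_sitemap`, `robots_txt_urls` and `EXAMPLE_SITEMAP` are hypothetical, the sketch only handles a plain `<urlset>` sitemap in the sitemaps.org format (not a sitemap index file), and it fetches nothing over the network.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit, urlunsplit

# XML namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parse_sitemap(xml_text: str) -> list[str]:
    """Return the page URLs listed in the <loc> elements of a <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


def robots_txt_urls(page_urls: list[str]) -> list[str]:
    """Return one robots.txt URL per unique scheme and host, in first-seen order."""
    seen: dict[str, None] = {}  # a dict keeps insertion order while deduplicating
    for url in page_urls:
        parts = urlsplit(url)
        seen.setdefault(urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", "")), None)
    return list(seen)


# Hypothetical sitemap used only for this example.
EXAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/help/billing</loc></url>
  <url><loc>https://www.facebook.com/example</loc></url>
</urlset>"""

if __name__ == "__main__":
    urls = parse_sitemap(EXAMPLE_SITEMAP)
    print(urls)
    print(robots_txt_urls(urls))  # one robots.txt for www.example.com, one for www.facebook.com
```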

In addition to providing the sitemaps, you can tune the behaviour of the Meya web crawler so that it only crawls certain domains and imports certain URLs. This is often useful if you only want to import a subset of your website. The sections below describe each field and how to use it in more detail.

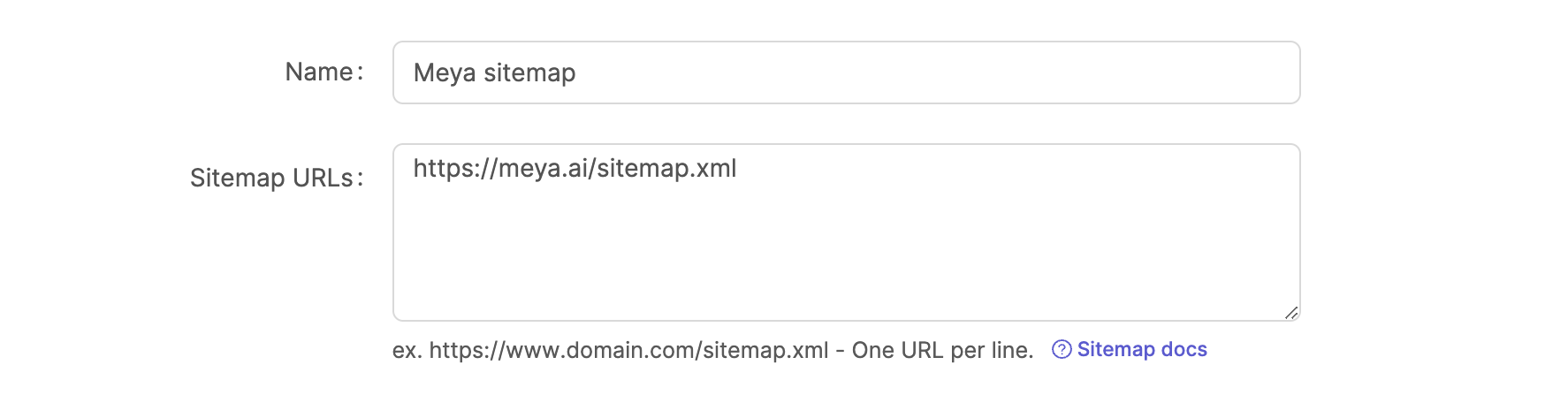

Sitemap URLs

This field contains a list of sitemap URLs that the Meya web crawler will download and parse. The Meya web crawler will then crawl and import each URL defined in the sitemaps.

For each URL the following rules are applied:

- The URL is ignored if it has already been crawled, i.e. URLs are deduplicated.

- The URL is ignored if it's not in the Allowed domain(s) list.

- The URL is only imported if it matches one of the patterns in the URL pattern(s) list.

- The URL is ignored if it matches a rule defined in the domain's robots.txt file:

  - Each unique domain's robots.txt file is always evaluated.

  - If you want the Meya web crawler to ignore the robots.txt rules for a URL, add a URL pattern to the Ignore robots.txt pattern(s) field.
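The rules above can be read as a filter applied to each URL in turn. The sketch below expresses them as a Python generator; it is a hypothetical illustration, not the Meya web crawler's code. It assumes an exact host-name match for allowed domains, uses `re.search` for URL patterns, and takes the robots.txt decision as a caller-supplied function. Because it follows the rule list literally, an empty URL pattern list imports nothing in this sketch; the real crawler may treat an empty list differently.

```python
import re
from typing import Callable, Iterable, Iterator
from urllib.parse import urlsplit


def filter_urls(
    urls: Iterable[str],
    allowed_domains: set[str],
    url_patterns: list[str],
    robots_allows: Callable[[str], bool],
) -> Iterator[str]:
    """Yield each URL that passes every rule, in the order the sitemaps list them."""
    compiled = [re.compile(p) for p in url_patterns]
    seen: set[str] = set()
    for url in urls:
        # Rule 1: ignore URLs that have already been seen (deduplication).
        if url in seen:
            continue
        seen.add(url)
        # Rule 2: ignore URLs whose host is not an allowed domain (exact match assumed).
        if urlsplit(url).hostname not in allowed_domains:
            continue
        # Rule 3: only import URLs that match at least one URL pattern.
        if not any(p.search(url) for p in compiled):
            continue
        # Rule 4: ignore URLs that the domain's robots.txt disallows.
        if not robots_allows(url):
            continue
        yield url


if __name__ == "__main__":
    sitemap_urls = [
        "https://www.example.com/help/billing",
        "https://www.example.com/help/billing",  # duplicate, skipped
        "https://www.facebook.com/example",  # not an allowed domain, skipped
        "https://www.example.com/blog/news",  # matches no URL pattern, skipped
    ]
    kept = filter_urls(
        sitemap_urls,
        allowed_domains={"www.example.com"},
        url_patterns=[r"^https://www\.example\.com/help/"],
        robots_allows=lambda url: True,  # stand-in: robots.txt allows everything here
    )
    print(list(kept))  # ['https://www.example.com/help/billing']
```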

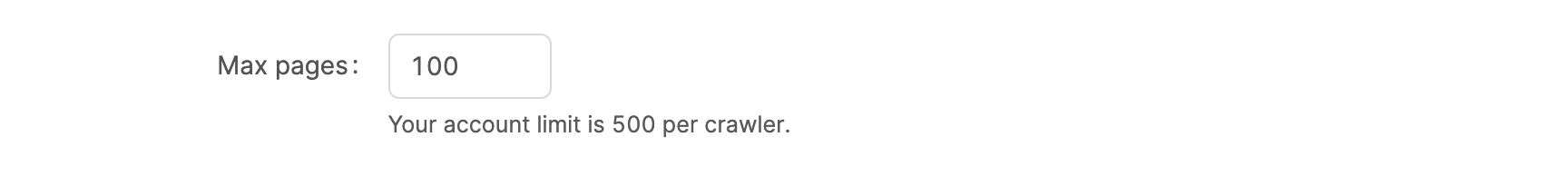

Max pages

This is the maximum number of pages that the Meya web crawler will import. Meya imposes a maximum page limit per crawler for your account, but you can set this field to a lower value if you want to put a hard limit on the Meya web crawler.

Setting Max pages to a low value, e.g. 10, is useful for testing your configuration and checking that the correct pages are imported before running a full crawl and index.
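A minimal sketch of how the two limits combine, assuming (as described above) that the account limit is a ceiling and Max pages can only lower it. The helpers `effective_page_limit` and `pages_to_import` and the limit values are hypothetical. The input is assumed to be URLs that already passed the crawl rules, so in this sketch ignored URLs do not count toward the limit.

```python
from itertools import islice
from typing import Iterable, Optional


def effective_page_limit(account_limit: int, max_pages: Optional[int]) -> int:
    """Max pages can lower the account's per-crawler limit but never raise it."""
    if max_pages is None:
        return account_limit
    return min(account_limit, max_pages)


def pages_to_import(urls: Iterable[str], account_limit: int, max_pages: Optional[int]) -> list[str]:
    """Take URLs in crawl order until the effective limit is reached."""
    return list(islice(urls, effective_page_limit(account_limit, max_pages)))


if __name__ == "__main__":
    urls = [f"https://www.example.com/page/{i}" for i in range(25)]
    # Hypothetical account limit of 500, with Max pages set to 10 for a test run.
    print(len(pages_to_import(urls, account_limit=500, max_pages=10)))  # 10
```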

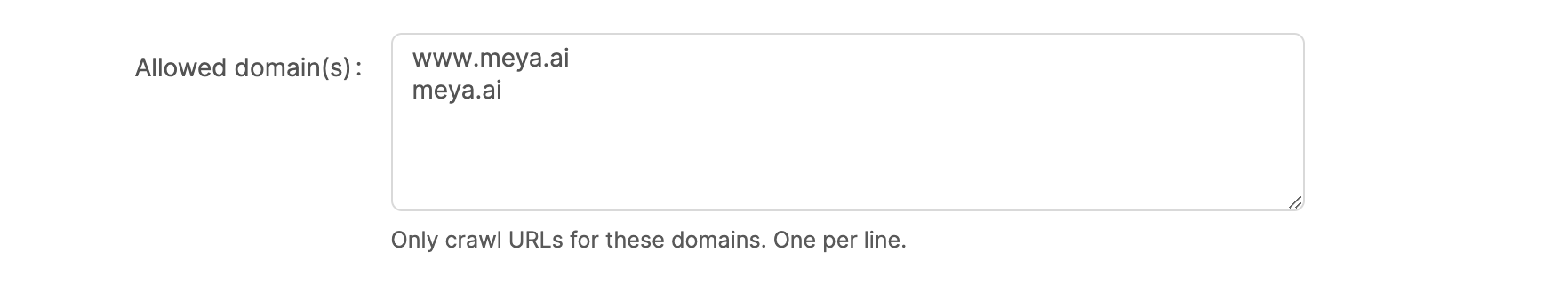

Allowed domains

A website's sitemap commonly includes URLs on other affiliated domains; for example, it might include the company's Facebook page. The Allowed domain(s) field lets you restrict the Meya web crawler to crawling only URLs whose domain matches one of the listed domains.

Note that these are domain names only, not fully qualified URLs.
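Because the entries are domain names, a crawler compares them with only the host part of each URL. The Python sketch below uses a hypothetical `is_allowed` helper and assumes exact, case-insensitive host matching; it shows why an entry such as `https://www.example.com` would never match, while `www.example.com` does. The sketch does not assume that subdomains of an allowed domain are also allowed.

```python
from urllib.parse import urlsplit


def is_allowed(url: str, allowed_domains: list[str]) -> bool:
    """Compare only the URL's host name with the allowed domain names."""
    host = urlsplit(url).hostname  # urllib returns the host in lower case, without the port
    return host is not None and host in {d.lower() for d in allowed_domains}


if __name__ == "__main__":
    url = "https://www.example.com/help/billing"
    print(is_allowed(url, ["www.example.com"]))  # True
    print(is_allowed(url, ["https://www.example.com"]))  # False: a full URL is not a domain name
    print(is_allowed("https://www.facebook.com/example", ["www.example.com"]))  # False
```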

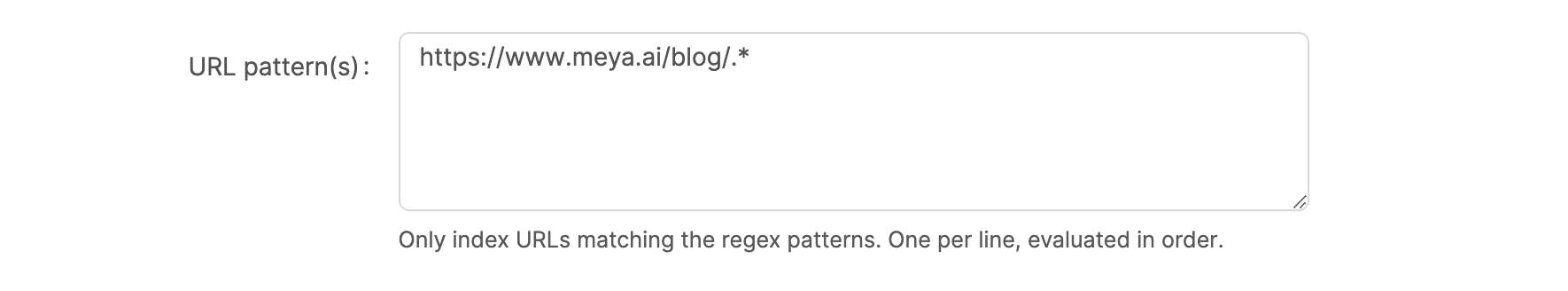

URL patterns

This allows you to define regex patterns to match specific URLs that you would like to be crawled and indexed.

Note that these regex patterns must be valid Python regex patterns. We recommend using the Pythex regex tool to help you write and test URL regex patterns.
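If you prefer to check patterns locally as well, Python's standard `re` module applies the same syntax rules. The hypothetical `check_patterns` helper below compiles each pattern (an invalid pattern raises `re.error`) and lists which sample URLs it matches. It assumes the crawler matches with `re.search`; anchoring a simple pattern with `^`, as in the example, makes it behave the same under `re.match`, so the result does not depend on that assumption.

```python
import re


def check_patterns(patterns: list[str], sample_urls: list[str]) -> dict[str, list[str]]:
    """Compile each pattern and list the sample URLs it matches."""
    results: dict[str, list[str]] = {}
    for pattern in patterns:
        compiled = re.compile(pattern)  # raises re.error if the pattern is not valid Python regex
        results[pattern] = [url for url in sample_urls if compiled.search(url)]
    return results


if __name__ == "__main__":
    samples = [
        "https://www.example.com/help/billing",
        "https://www.example.com/blog/news",
    ]
    # Escape literal dots as \. so they do not match any character.
    print(check_patterns([r"^https://www\.example\.com/help/"], samples))
    try:
        check_patterns(["(unclosed"], samples)
    except re.error as exc:
        print("invalid pattern:", exc)
```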

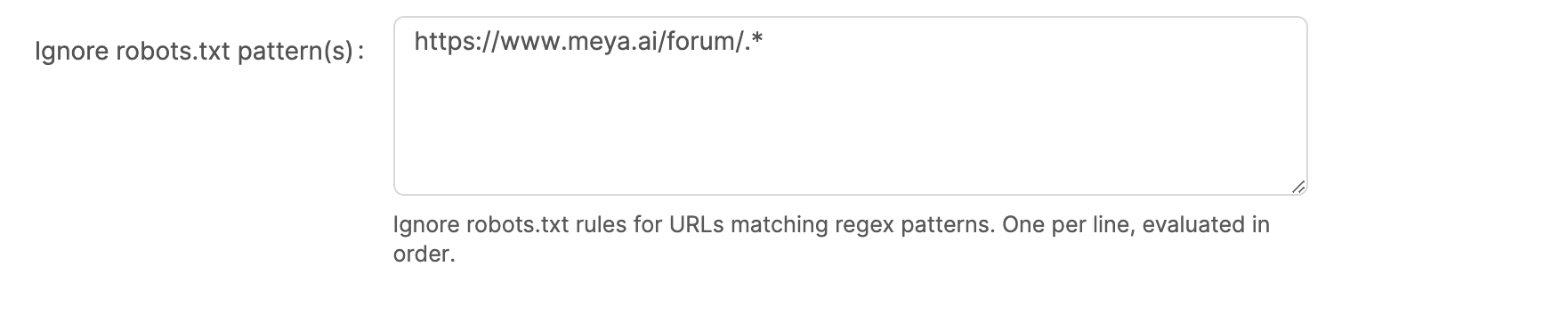

Ignore robots.txt patterns

By default, the Meya web crawler downloads and evaluates the robots.txt file for each unique domain found in the URLs parsed from your sitemaps. Before each URL is crawled, it is first evaluated against the rules defined in its domain's robots.txt file. It is best practice for a web crawler to always adhere to the robots.txt rules (in some cases a website might block a crawler that ignores its robots.txt file); however, in some situations you might want to explicitly ignore the robots.txt rules for particular URLs.

This field lets you provide a list of URL patterns for which the robots.txt rules should be ignored.
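The interaction between robots.txt and the ignore list can be sketched with Python's standard `urllib.robotparser` module standing in for the Meya web crawler's own robots.txt handling, which may differ (for example, Python's parser does not support `*` wildcards inside paths). The `USER_AGENT` value and the `make_robots_check` helper are hypothetical, and ignore patterns are assumed to be Python regexes matched with `re.search`, like the URL pattern(s) field.

```python
import re
from urllib.robotparser import RobotFileParser

USER_AGENT = "ExampleBot"  # hypothetical user agent, not Meya's real one


def make_robots_check(robots_txt: str, ignore_patterns: list[str]):
    """Return a function that decides whether a URL may be crawled."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    compiled = [re.compile(p) for p in ignore_patterns]

    def allowed(url: str) -> bool:
        # URLs matching an ignore pattern skip the robots.txt rules entirely.
        if any(p.search(url) for p in compiled):
            return True
        # Every other URL must be allowed by robots.txt.
        return parser.can_fetch(USER_AGENT, url)

    return allowed


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /internal/\n"
    check = make_robots_check(robots, [r"/internal/faq$"])
    print(check("https://www.example.com/help/billing"))  # True
    print(check("https://www.example.com/internal/notes"))  # False
    print(check("https://www.example.com/internal/faq"))  # True (ignore pattern)
```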

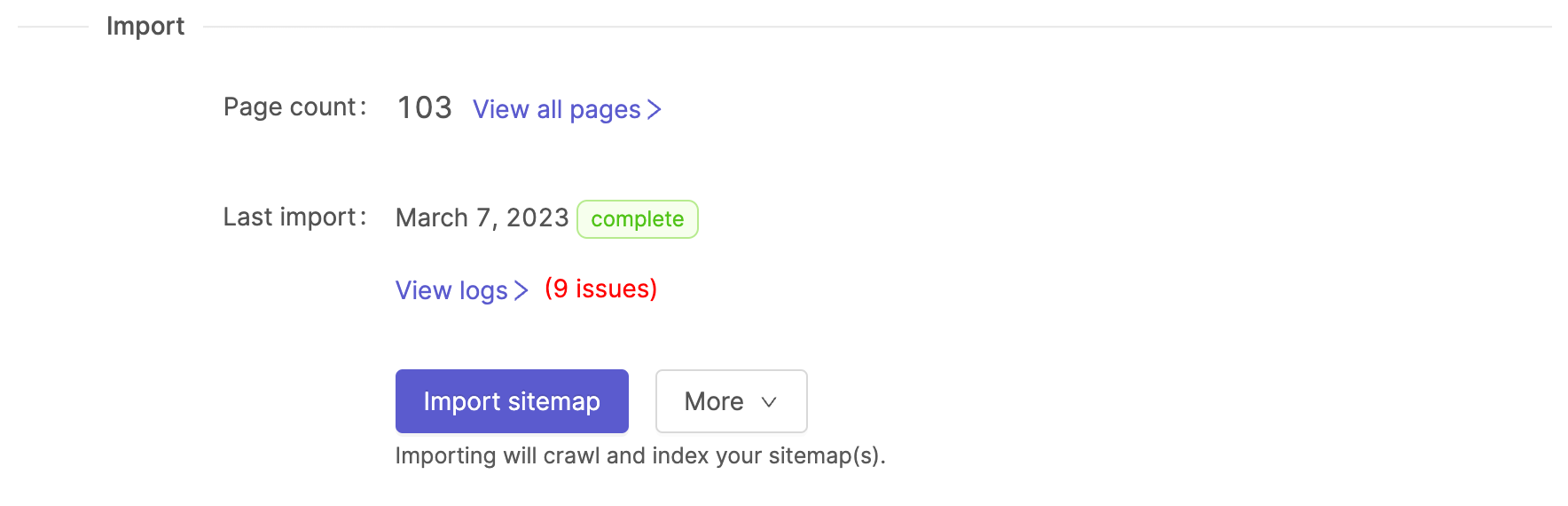

Import

Once you've configured your sitemap data source, click the Import sitemap button to start the Meya web crawler. The following happens when you start the import process:

- The fields are validated and saved. The web crawler will not start if there are any configuration errors.

- The Meya web crawler will start crawling your sitemap URLs - this can take a couple of minutes.

- Once the Meya web crawler is done, it will automatically start the indexer to chunk and index all the newly imported content items.

Once the import job is complete, you can view all the imported content items by clicking on the View all pages link.

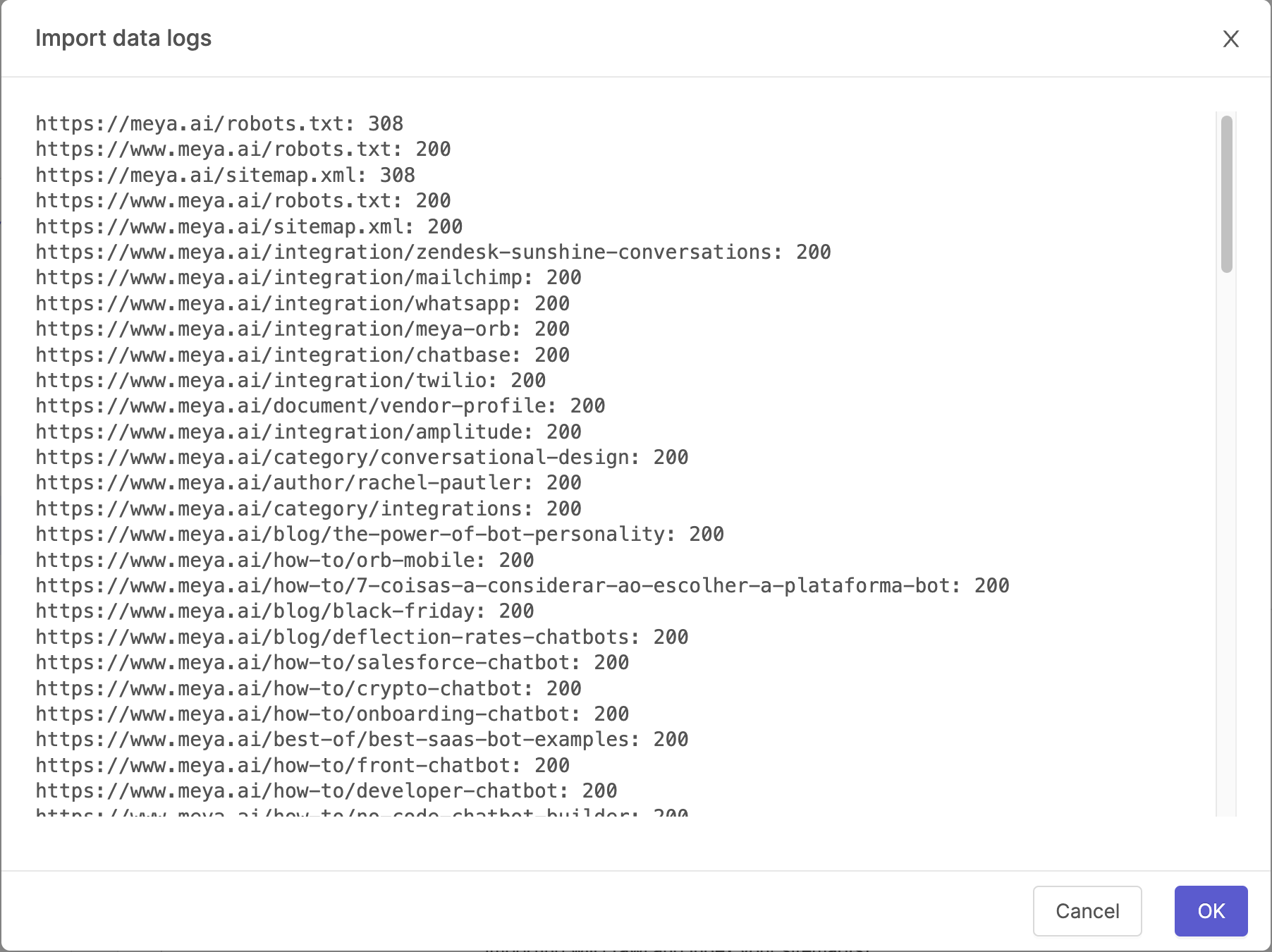

Import log

It is also common for the Meya web crawler to be unable to crawl and import certain URLs. This can be due to a number of factors, for example missing pages, web server errors, or URLs blocked by robots.txt. You can view the Meya web crawler logs by clicking the View logs link.

The logs contain the URL that was crawled and the associated HTTP response status code, or an error message.
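If a crawl produces many log entries, a small triage script can group them by likely cause. The sketch below is hypothetical: it assumes you have copied each log entry into a `(url, status, error)` tuple, with `status` set to `None` when the log shows an error message instead of a status code. The categories mirror the causes listed above and are not categories that Meya itself reports.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple

# Hypothetical shape of one log entry: (url, HTTP status code or None, error message or None).
LogEntry = Tuple[str, Optional[int], Optional[str]]


def classify(status: Optional[int], error: Optional[str]) -> str:
    """Map one log entry to a rough failure category."""
    if status is None:
        # No status code: the entry carries an error message instead.
        return "error" if error else "unknown"
    if 200 <= status < 300:
        return "ok"
    if status in (404, 410):
        return "missing page"
    if 500 <= status < 600:
        return "server error"
    return f"other HTTP {status}"


def summarize(entries: Iterable[LogEntry]) -> Counter:
    """Count log entries per category."""
    return Counter(classify(status, error) for _url, status, error in entries)


if __name__ == "__main__":
    entries = [
        ("https://www.example.com/help/billing", 200, None),
        ("https://www.example.com/help/old-page", 404, None),
        ("https://www.example.com/help/slow-page", 503, None),
    ]
    print(summarize(entries))
```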

Updated 9 months ago