Input components

For inputting text and other data

Inputs ask your users for information. Which input to use depends on the information you need. Inputs range from simple (input_string, which matches any string), through somewhat complex (input_pattern, which looks for a specific regex pattern), to more complex requests such as audio or video inputs.

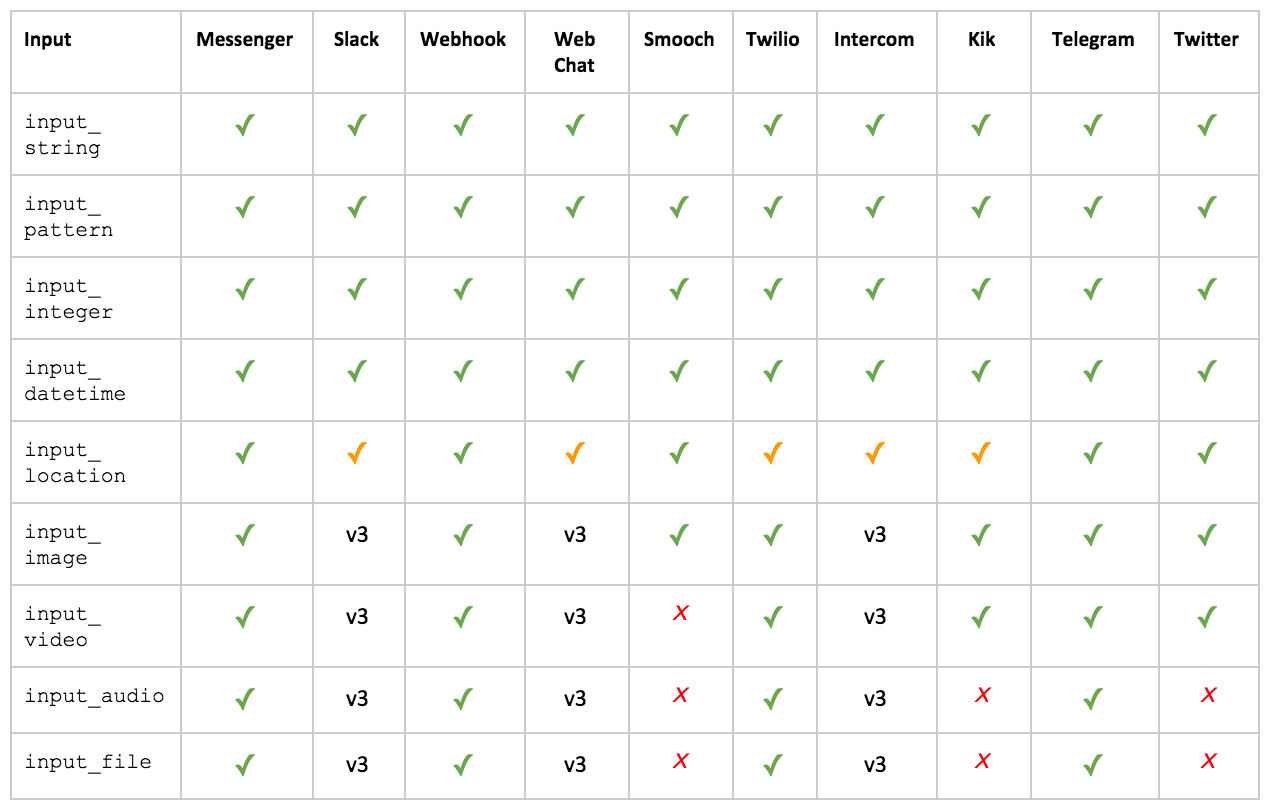

Input support matrix

Only certain messaging integrations support inputs other than text. See the support matrix below for more detail.

Inputs vs. intents

Most inputs have a corresponding intent with the same behavior and messaging integration compatibility. For example, input_image is paired with the image trigger.


meya.input_string

Optionally outputs text and waits for a response from the user. Matches any string.

| Property | Description | Required / Default |
|---|---|---|
| text | The text to output. | Required |
| | Text to speak to the user. This field also accepts SSML markup to customize pronunciation. | Optional |
| output | The key used to store the data for subsequent steps in the flow. | Optional. Default: |
| scope | Where to store the data. One of flow, user, bot. | Optional. Default: |
| | If | Optional. Default: |
| | If | Optional. Default: |

Transitions

next: the default transition for an answer (if not present, the flow transitions to the next state in sequence)
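As a minimal sketch of how output and scope work together, the flow below stores the answer on the user scope and reads it back in the following state. The state names ask_name and thank_user are hypothetical, and the template syntax is assumed to work for the user scope the same way {{ flow.language }} does for the flow scope. No next transition is given, so the flow simply moves on to the next state in sequence.

```yaml
# Hypothetical two-state flow; only the state names are invented.
states:
  ask_name:
    component: meya.input_string
    properties:
      text: "What's your middle name?"
      output: middle_name   # key the answer is stored under
      scope: user           # stored on the user scope
  thank_user:
    component: meya.text
    properties:
      # Reads the stored answer back as <scope>.<output>
      text: "Thanks! I'll remember {{ user.middle_name }}."
```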

```yaml
component: meya.input_string
properties:
  text: "What's your middle name?"
  output: middle_name
  scope: user
```

Example - Language Detection

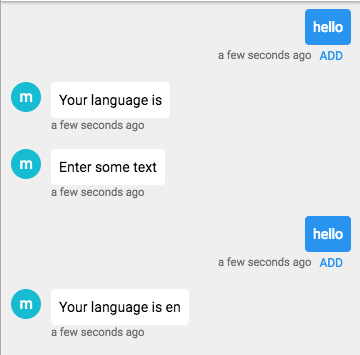

You can use the input_string component to detect a user's language. Start by creating a new flow and paste in the following code.

```yaml
states:
  first:
    component: meya.text
    properties:
      text: "Your language is {{ flow.language }}"
  input_state:
    component: meya.input_string
    properties:
      text: "Enter some text"
      detect_language: true
      output: value
      scope: flow
  greeting:
    component: meya.text
    properties:
      text: "Your language is {{ flow.language }}"
```

Test it out in the test chat window. Entering "hello" will match the catchall trigger and start the flow. The bot will say "Your language is" since it hasn't yet detected your language. Enter "hello" again and the bot will respond "Your language is en". Clear the messages to start over. This time try entering "bonjour". The bot will respond "Your language is fr".

meya.input_pattern

Matches a regex pattern for input. Properties are the same as meya.input_string with a few additions.

| Property | Description | Required / Default |
|---|---|---|
| text | The text to output. | Required |
| | Text to speak to the user. This field also accepts SSML markup to customize pronunciation. | Optional |
| pattern | The regex pattern to match the input to. | Required |
| | If | Optional. Default: true |
| output | The key used to store the data for subsequent steps in the flow. | Optional. Default: value |
| scope | Where to store the data. One of flow, user, bot. | Optional. Default: flow |
| | Message sent to user if pattern is not matched. | Optional. Default: Sorry, I don't understand. Try again. |
| | If | Optional. Default: |

Transitions

next: the default transition for an answer (if not present, the flow transitions to the next state in sequence)

no_match: the state to transition to if require_match is false and the user inputs something that doesn't match the pattern.

```yaml
component: meya.input_pattern
properties:
  text: "What's your API key?"
  pattern: ^(?P<capture_key>[0-9a-f]{32})$
  output: api_key
  require_match: true
  scope: user
```

Regex capture groups

Capture groups are supported with meya.input_pattern. For example, pattern: ^(?P<capture_key>[0-9a-f]{32})$ will make capture_key available on the specified scope. Ex: {{ flow.capture_key }}

If a named capture group does not match, flow.<named_group> will equal the string 'None'.

The entire utterance can be accessed by reading the value of the output property.
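To make the interaction between require_match, no_match and capture groups concrete, here is a minimal sketch of a complete flow. The state names (ask_key, explain_format, confirm) are hypothetical, and it assumes that a next transition is honored on a meya.text state the same way it is on an input state. With require_match set to false, a non-matching answer follows no_match, the bot explains the expected format, and the flow loops back to ask again.

```yaml
# Hypothetical flow; state names are invented for illustration.
states:
  ask_key:
    component: meya.input_pattern
    properties:
      text: "What's your API key?"
      pattern: ^(?P<capture_key>[0-9a-f]{32})$
      output: api_key
      require_match: false   # let the flow handle a non-match itself
      scope: flow
    transitions:
      next: confirm
      no_match: explain_format
  explain_format:
    component: meya.text
    properties:
      text: "An API key is 32 characters, using only 0-9 and a-f."
    transitions:
      # Assumption: meya.text accepts a next transition as well.
      next: ask_key
  confirm:
    component: meya.text
    properties:
      # capture_key is the named group; api_key holds the entire utterance.
      text: "Got it. Your key is {{ flow.capture_key }}."
```

Because the pattern is anchored with ^ and $, the named group and the output key hold the same text here. They differ once the pattern captures only part of the utterance.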

meya.input_integer

Matches an integer value

| Property | Description | Required / Default |
|---|---|---|
| text | The text to output. | |
| | Text to speak to the user. This field also accepts SSML markup to customize pronunciation. | Optional |
| | If | Optional. Default: true |
| output | The key used to store the data for subsequent steps in the flow. | Optional. Default: value |
| scope | Where to store the data. One of flow, user, bot. | Optional. Default: flow |
| | Message sent to user if input was not an integer. | Optional. Default: Sorry, I don't understand. Try again. |
| | If | Optional. Default: |

Transitions

next: the default transition for an answer (if not present, the flow transitions to the next state in sequence)

no_match: the state to transition to if require_match is false and the user inputs something ambiguous

```yaml
component: meya.input_integer
properties:
  text: "How old are you?"
  output: age
  require_match: false
  scope: user
transitions:
  next: proper_answer
  no_match: ambiguous_answer
```

meya.input_datetime

Matches a "human-readable" date. Examples that will match: "now", "in 15 minutes", "tomorrow", "next wednesday at 10:30am", "9pm tonight", "October 20, 1978", "Oct. 20 at 12pm". The result is stored as Unix time (seconds).
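Because the result is stored as Unix time in seconds, a later state sees a number rather than the phrase the user typed. The sketch below illustrates this under a few assumptions: the state names (ask_time, confirm) and the output key remind_at are hypothetical, and scope is assumed to behave here as it does for meya.input_string.

```yaml
# Hypothetical flow; state names and the remind_at key are invented.
states:
  ask_time:
    component: meya.input_datetime
    properties:
      text: "When should I remind you?"
      output: remind_at
      scope: flow
      timezone: Canada/Eastern   # interpret "9pm tonight" in this zone
  confirm:
    component: meya.text
    properties:
      # Renders the stored value, a Unix timestamp in seconds.
      text: "Stored timestamp: {{ flow.remind_at }}"
```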

| Property | Description | Required / Default |
|---|---|---|
| text | The text to output. | Required |
| | Text to speak to the user. This field also accepts SSML markup to customize pronunciation. | Optional |
| timezone | Which timezone to use for interpreting times. Entered as an Olson database name (for example, Canada/Eastern). If not set, the component will attempt to read the timezone from the datastore. | Optional. Default: |
| | Which datastore scope to read the timezone from. | Optional. Default: |
| | If | Optional. Default: |
| | If | Optional. Default: |
| output | The key used to store the data for subsequent steps in the flow. | Optional. Default: |
| scope | Where to store the data. One of flow, user, bot. | Optional. Default: |
| | Message sent to user if input was not a date or time. | Optional. Default: |
| | If | Optional. Default: |

Transitions

next: the default transition for an answer (if not present, the flow transitions to the next state in sequence)

no_match: the state to transition to if require_match is false and the user inputs something ambiguous

A note about timezones

If you don't specify a timezone and there is no timezone set for the user, Meya assumes GMT. Keep this in mind when using the data at a later date. Meya will do its best to populate the user's time zone for you. However, we can't guarantee that it's available.

```yaml
component: meya.input_datetime
properties:
  text: "When is your birthday?"
  output: birthdate
  timezone: Canada/Eastern
```

meya.input_image

Gets an image url uploaded by the user.

Supported on Messenger, Telegram, Kik and Smooch.

| Property | Description | Required / Default |
|---|---|---|
| text | The text to output. | Required |
| | Text to speak to the user. This field also accepts SSML markup to customize pronunciation. | Optional |
| output | The key used to store the data. | Optional. Default: |
| scope | Where to store the data. One of | Optional. Default: |
| | Message sent to user if input was not an image. | Optional. Default: |
| | If | Optional. Default: |

```yaml
component: meya.input_image
properties:
  text: "Send me a picture of your favorite food!"
  output: food_image
  scope: flow
```

meya.input_video

Gets a video url uploaded by the user.

Supported on Messenger, Telegram, Kik and Twilio

| Property | Description | Required / Default |
|---|---|---|
| text | The text to output to the user. | Required |
| | Text to speak to the user. This field also accepts SSML markup to customize pronunciation. | Optional |
| output | The key used to store the video URL. | Optional. Default: |
| scope | Where to store the data. One of | Optional. Default: |
| | Message sent to user if input was not a video. | Optional. Default: |
| | If | Optional. Default: |

```yaml
component: meya.input_video
properties:
  text: "Send a video of your view!"
  output: view_video
  scope: flow
```

meya.input_audio

Gets an audio url uploaded by the user.

Supported on Messenger, Telegram and Twilio.

| Property | Description | Required / Default |
|---|---|---|
| text | The text to output to the user. | Required |
| | Text to speak to the user. This field also accepts SSML markup to customize pronunciation. | Optional |
| output | The key used to store the audio URL. | Optional. Default: |
| scope | Where to store the data. One of | Optional. Default: |
| | Message sent to user if input was not an audio file. | Optional. Default: |
| | If | Optional. Default: |

```yaml
component: meya.input_audio
properties:
  text: "Send a recorded message of your order :)"
  output: audio_url
  scope: flow
```

meya.input_file

Gets a file url uploaded by the user.

Supported on Messenger, Telegram and Twilio.

| Property | Description | Required / Default |
|---|---|---|
| text | The text to output to the user. | Required |
| | Text to speak to the user. This field also accepts SSML markup to customize pronunciation. | Optional |
| output | The key used to store the file URL. | Optional. Default: value |
| scope | Where to store the data. One of | Optional. Default: flow |
| | Message sent to user if input was not a file. | Optional. Default: Sorry, I don't understand. Try again. |
| | If | Optional. Default: |

```yaml
component: meya.input_file
properties:
  text: "Send a file of the doc"
  output: file_url
  scope: flow
```

meya.input_location

Gets and stores the location information about the user. Works with a text-based answer (e.g. Sunnyvale, CA) for all integrations or a location pin 📍 in the case of Telegram and Messenger.

Support

Location pins 📍 are only supported for Telegram, Messenger and Smooch.

| Property | Description | Required / Default |
|---|---|---|
| text | The text to output. | Required |
| | Text to speak to the user. This field also accepts SSML markup to customize pronunciation. | Optional |
| output | The key used to store the data entered by the user. | Default: |
| | The assumed confidence when matching. | Optional. Default: |
| | If | Optional. Default: |
| | Message sent to user if input was not a location. | Optional. Default: |
| | If | Optional. Default: |

The data is stored to the user datastore. You can access the information found in the table below.

| Datastore | Description |
|---|---|
| | The latitude of the location. |
| | The longitude of the location. |
| | The timezone of the location. |
| | The city of the location. |
| | The state of the location. |
| | The country of the location. |

Example of a flow using input location.

```yaml
component: meya.input_location
properties:
  text: "Where are you?"
  output: location
```